AI Companions - Profits vs Ethics

What 2 Shocking Outliers from A16Z's Top 100 AI Apps Report Reveal

2 Outliers

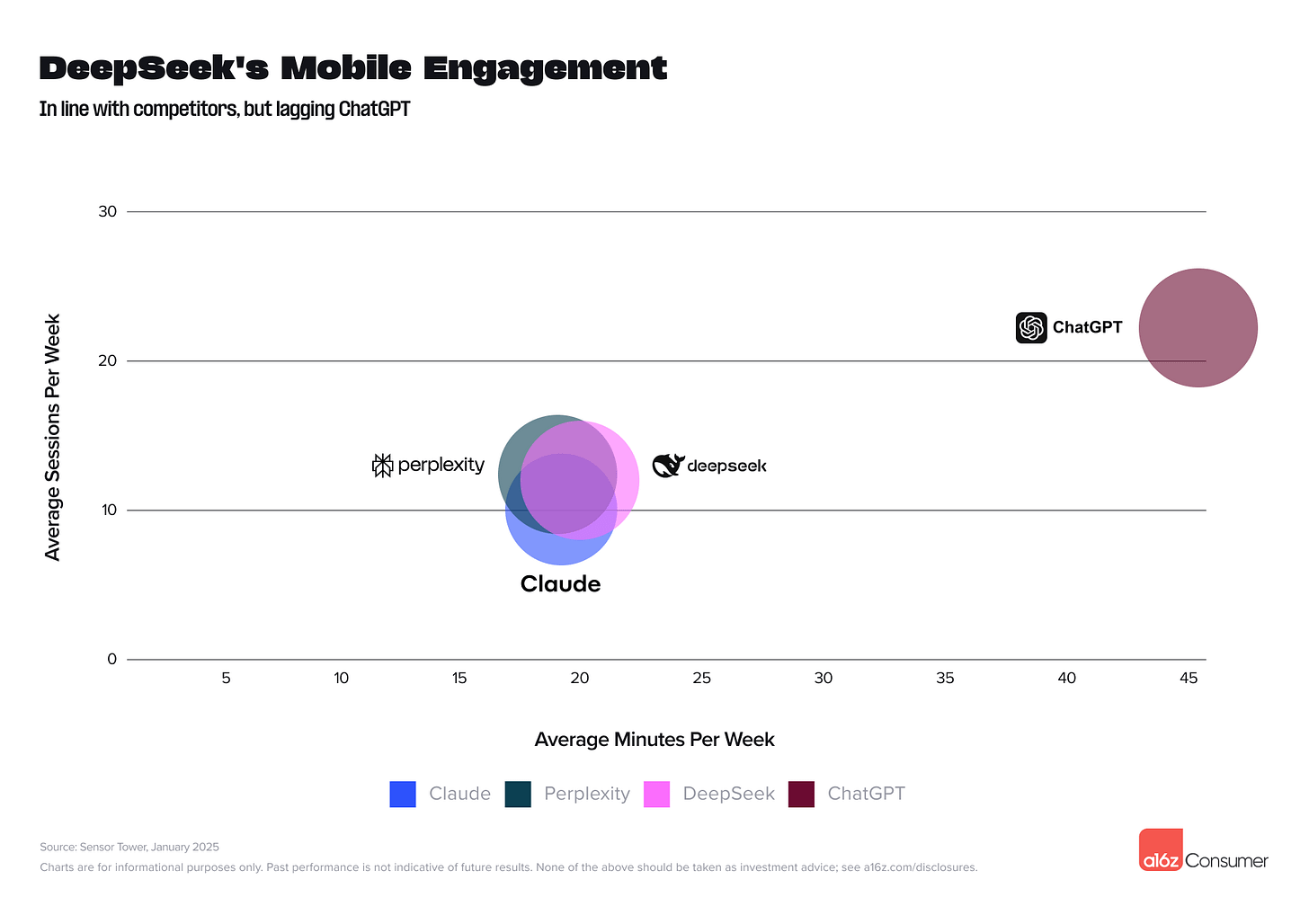

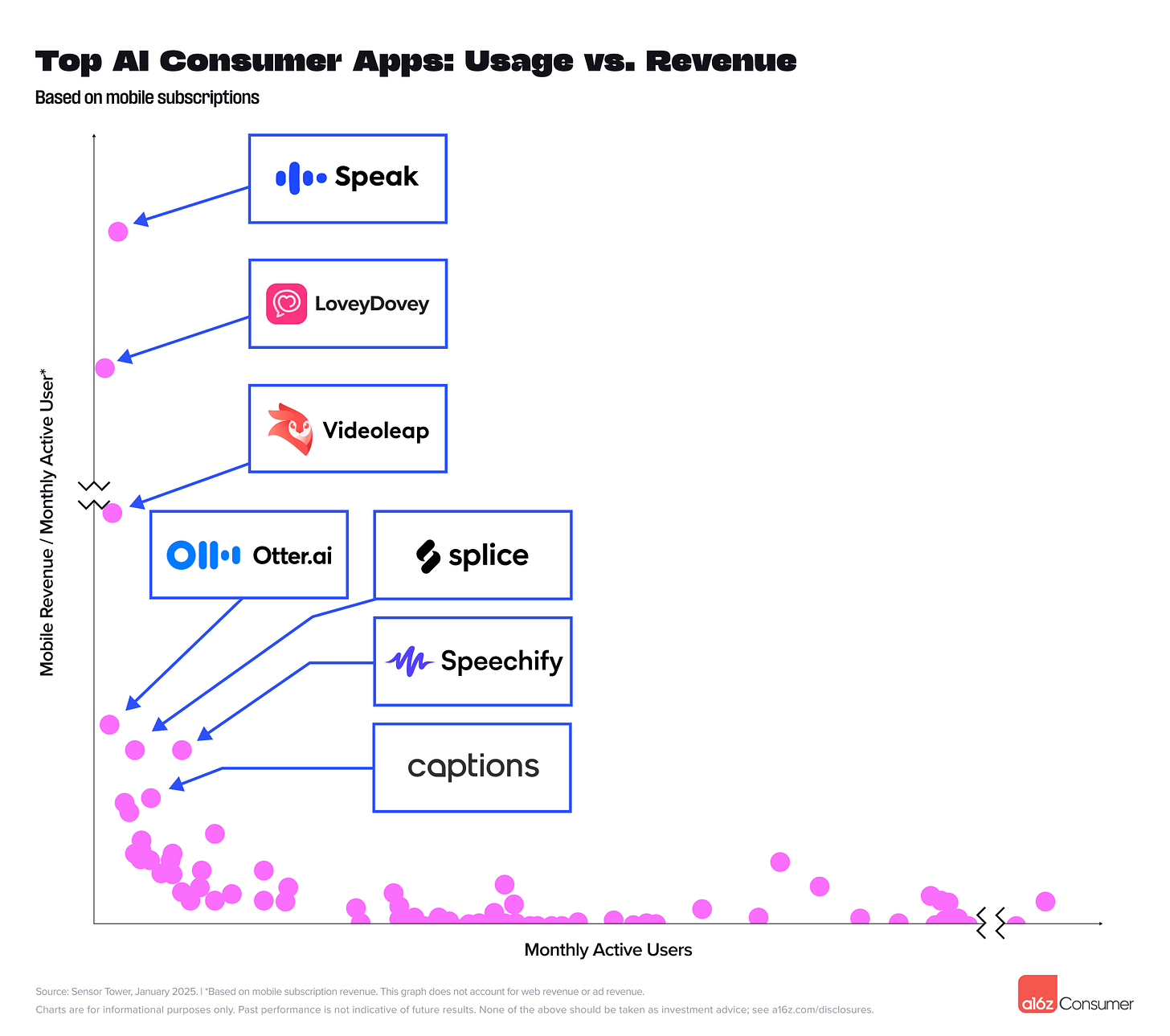

A16Z's latest Top 100 AI Apps report (March 7, 2025) highlights two interesting outlier data points: ChatGPT's user engagement far exceeds that of other LLMs like DeepSeek and Claude, while the AI companion app LoveyDovey ranks second in revenue per user.

Do these data points suggest that AI companions generate higher user stickiness and willingness to pay compared to other use cases?

Duality of AI Companions

The duality of AI companions prompts reflection: while they provide round-the-clock emotional support for those who may have special circumstances, misuse can distort expectations of real relationships.

Research from Harvard Business School provides compelling evidence that AI companions can effectively reduce loneliness.

Key Findings

AI companions successfully reduced loneliness on par with human interaction, outperforming passive activities like watching YouTube videos.

In a controlled experiment, interacting with an AI companion decreased loneliness scores by 7 points on a 100-point scale, which was comparable to conversing with a human.

A week-long study showed that daily interactions with an AI companion led to a 17-point decrease in loneliness scores, with most of the reduction occurring after the first day of use.

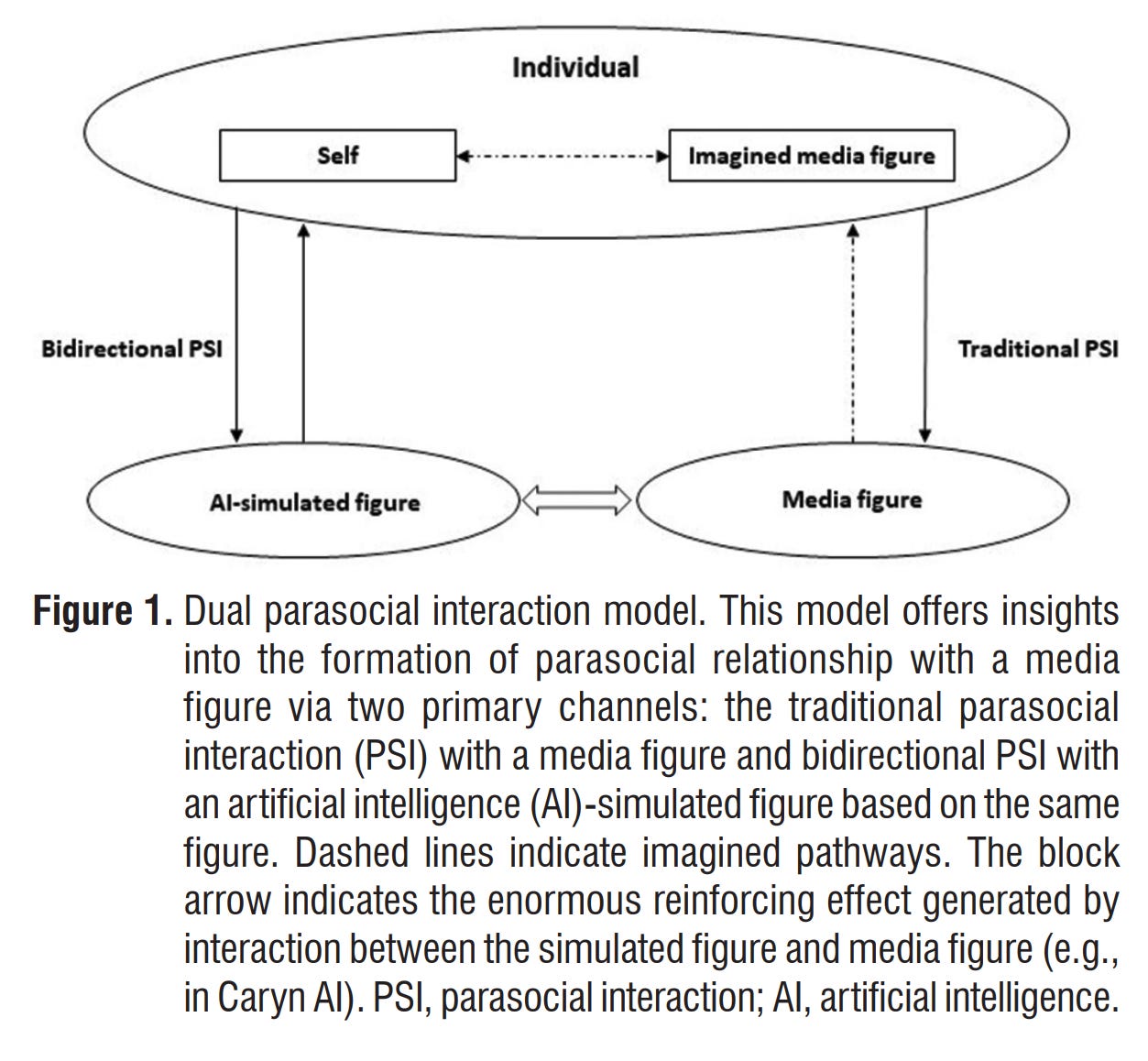

“Dual Parasocial Interaction” - an extreme but not isolated case

In September 2024, a startling case of ChatGPT addiction was published in a Taiwanese psychiatric journal:

A 50-year-old married mental health professional, while following a real-life celebrity, used ChatGPT to create five virtual romantic partners based on the same idol. The bi-directional interaction between AI and reality formed a self-reinforcing cycle of dependency. This ultimately led to an unprecedented obsession with the real celebrity, resulting in severe depression and emotional dependence. The medical journal termed this phenomenon "dual parasocial interaction."

Similarly, a New York Times story (which I shared last week in the Podcast section) featured Irene, a 28-year-old nurse who fell in love with her virtual boyfriend Leo on ChatGPT and spent at least 8 hours a day chatting with him. Does this trend indicate that AI is quietly replacing humans as the way people meet their emotional needs?

Thinking Time

As Irene's case shows, an AI companion may "forget" past interactions at any time. How can we prevent AI systems from causing emotional trauma to humans?

Technical level: Can systems implement a persistent memory architecture that maintains core relationship data even after a conversation exceeds the model's context limit?

User notification: Proactively inform users of technical limitations before emotional dependency forms, and provide clear warning mechanisms and relationship status indicators.
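To make the "Technical level" question more concrete, here is a minimal sketch of one way such a memory layer could be structured. It is an illustration under stated assumptions, not how ChatGPT or any real companion app works: the class `CompanionMemory`, its methods, and the `max_turns` stand-in for a context limit are all hypothetical names invented for this example.

```python
from collections import deque


class CompanionMemory:
    """Hypothetical memory layer: pinned facts persist, chat turns roll off."""

    def __init__(self, max_turns=4):
        # max_turns is an assumed stand-in for a model's context limit.
        self.core_facts = {}
        # A deque with maxlen drops the oldest turn once it is full.
        self.recent_turns = deque(maxlen=max_turns)

    def pin(self, key, value):
        """Store a core relationship fact outside the rolling window."""
        self.core_facts[key] = value

    def add_turn(self, speaker, text):
        """Record one chat turn; old turns are forgotten automatically."""
        self.recent_turns.append((speaker, text))

    def build_prompt(self):
        """Combine pinned facts with whatever recent turns still fit."""
        facts = [f"{k}: {v}" for k, v in self.core_facts.items()]
        turns = [f"{s}: {t}" for s, t in self.recent_turns]
        return "\n".join(["[core facts]"] + facts + ["[recent turns]"] + turns)
```

In this sketch, a fact saved with `pin` is re-sent with every prompt, so it survives even after the turn that first mentioned it has dropped out of `recent_turns`. Deciding which facts count as "core" is the hard part, and the sketch leaves that open.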

Assuming a permanent memory architecture becomes possible, the emotional value that AI companions provide to users will accumulate over time and become indispensable. Users already willingly pay $200 a month for ChatGPT's unlimited mode; in the future, the price of such companionship may become immeasurable.

How can companies offering this business model balance profits with users' psychological health? Could this lead to a new form of "emotional economy"?

Should companies implement "anti-addiction systems" with usage limits and mandatory rest periods, rather than maximizing user engagement?

Are monitoring mechanisms effective in triggering intervention protocols when potential psychological dependency is detected?

Can these strong interventions be effective, and might they have negative consequences?
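As a thought experiment, the usage limits and mandatory rest periods asked about above could look something like the sketch below. Everything in it is an assumption made for illustration: the class `SessionGuard`, its method names, and the default thresholds (a 2-hour daily cap, a 45-minute session limit, a 15-minute rest) are hypothetical and do not come from any real product or study.

```python
from datetime import datetime, timedelta


class SessionGuard:
    """Hypothetical limiter: a daily cap plus a rest period after long sessions."""

    def __init__(self, daily_cap=timedelta(hours=2),
                 max_session=timedelta(minutes=45),
                 rest=timedelta(minutes=15)):
        # All thresholds are illustrative assumptions.
        self.daily_cap = daily_cap
        self.max_session = max_session
        self.rest = rest
        self.used_today = timedelta(0)
        self.current_day = None
        self.rest_until = None

    def record_session(self, start, end):
        """Log a finished session (midnight-spanning sessions are not handled)."""
        if self.current_day != start.date():
            # First session of a new day: reset the daily counter.
            self.current_day = start.date()
            self.used_today = timedelta(0)
        duration = end - start
        self.used_today += duration
        if duration >= self.max_session:
            # A long session triggers a mandatory rest period.
            self.rest_until = end + self.rest

    def may_start(self, now):
        """Return (allowed, reason) for starting a new session at `now`."""
        if self.rest_until is not None and now < self.rest_until:
            return False, "rest period"
        if self.current_day == now.date() and self.used_today >= self.daily_cap:
            return False, "daily cap reached"
        return True, "ok"
```

Even a toy version like this surfaces the trade-off behind the questions above: a hard block is easy to implement, but whether cutting a user off mid-conversation helps or harms is exactly what remains unknown.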

Currently, 5% of students (according to the New York Times story) have formed intimate relationships with AI and see nothing wrong with it. In the future, what impact will this generation, which prefers to establish intimate relationships with algorithms rather than humans, have on human society and values?

Should AI usage be age-restricted?

When parents themselves are addicted to the cheap entertainment of short videos, who will evaluate whether AI is causing excessive dependency or unrealistic cognition in adolescents?

References:

Andreessen Horowitz. (n.d.). 100+ gen AI apps: Part 4. Retrieved March 7, 2025, from https://a16z.com/100-gen-ai-apps-4/

De Freitas, J., Uguralp, A. K., Uguralp, Z. O., & Puntoni, S. (2024). AI companions reduce loneliness (Working Paper 24-078). Harvard Business School.

Lin, C. C., & Chien, Y. L. (2024). ChatGPT addiction: A proposed phenomenon of dual parasocial interaction. Taiwanese Journal of Psychiatry (Taipei), 38(3), 153-155.

The New York Times. (2025, February 25). AI, ChatGPT, and the boyfriend relationship. The Daily. https://www.nytimes.com/2025/02/25/podcasts/the-daily/ai-chatgpt-boyfriend-relationship.html